Trump trial sees new witnesses to close out first week of testimony

Prosecutors in former President Donald Trump's criminal trial in New York called two new witnesses to the stand on Friday, rounding out the first week of testimony.

Multiple tornadoes were reported in Nebraska, and a destructive storm moved from a largely rural area into the Omaha area.

A U.S. MQ-9 Reaper has crashed in Yemen. It may be the third $30 million drone shot down by the Houthis since November.

Russia has launched a barrage of missiles against Ukraine directed at energy facilities.

Police are cracking down at some university protests over Israel's war against Hamas in Gaza.

The father of one now faces the potential of a mandatory minimum prison sentence of up to 12 years.

Under the new law signed this week, ByteDance has nine to 12 months to sell the platform to an American owner, or TikTok faces being banned in the U.S.

Former Colorado paramedic Jeremy Cooper was sentenced on Friday afternoon to four years' probation, 14 months of work release and 100 hours of community service.

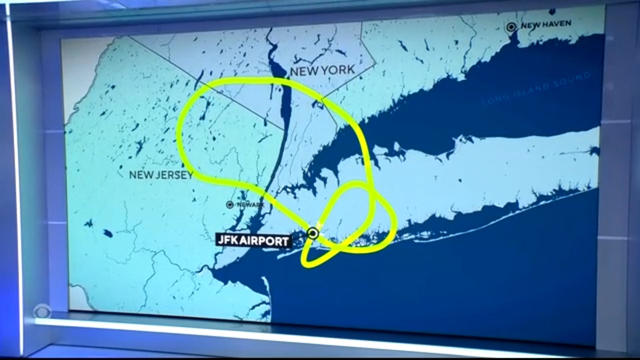

An emergency exit slide "separated" from a Delta flight Friday, prompting an emergency return to New York City's John F. Kennedy Airport.

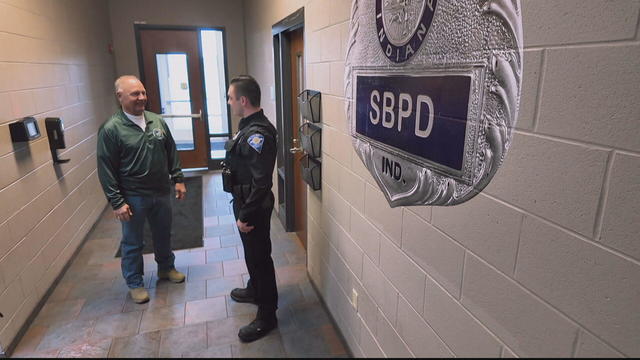

For more than two decades, retired Lt. Gene Eyster wondered what became of the boy he found abandoned in a cardboard box in an apartment hallway.

The income needed to join your state's top earners can vary considerably, from a low of $329,620 annually in West Virginia to $719,253 in Washington D.C.

About 7 in 10 retirees stopped working before they turned 65. For many of them, it was for reasons beyond their control.

Harvey Weinstein is still serving a 16-year sentence in a California case, where an appeal is expected that could rely on arguments similar to those that overturned his New York conviction.

The first known HIV cases linked to nonsterile cosmetic injections highlight the risk of unlicensed providers.

The Supreme Court is considering whether Trump is entitled to broad immunity from criminal charges in the 2020 election case.

There are no cameras allowed in the court where Trump is being tried.

A federal judge rebuffed Donald Trump's request for a new trial in E. Jean Carroll's civil suit, which ended with an $83.3 million judgment in her favor.

"I am happy to debate him," President Biden said during an interview with Howard Stern.

Trump has in the past railed against absentee voting, declaring that "once you have mail-in ballots, you have crooked elections."

President Biden finds familiar and active allies for his reelection bid with labor union endorsements.

Sabreen Erooh had survived an emergency cesarean section after her mother was fatally wounded in an Israeli airstrike.

Senate Minority Leader Mitch McConnell appears on "Face the Nation" as pro-Palestinian protests roil American politics.

Dubai is known for using planes to help prompt precipitation over the region. But experts say it did not play a role in this week's historic downpour.

A new generation of deodorant products promises whole-body odor protection. Should you try one? Dermatologists share what to know.

Democrats who led probes into Trump's role in the Jan. 6 Capitol riot expect to face arrest if he wins: "Anybody who has testified against him...should be worried."

Gold can be a smart bet for seniors — and that's not just due to the recent gold price uptick, either.

A HELOC can be a great borrowing option now, but the repayment process is unique. Here's what to know about it.

The average American is currently facing a hefty amount of credit card debt — but there are good ways to tackle it.

With a relatively low average monthly cost of living and a low crime rate, this little-known town has a lot to offer retirees, according to one report.

A gold pocket watch recovered along with the body of John Jacob Astor, the richest passenger on the Titanic, is up for auction.

The median mortgage payment jumped to a record $2,843 in April, up nearly 13% from a year ago, a new analysis finds.

Here's how and when to watch Game 4 of the Boston Bruins vs. Toronto Maple Leafs Stanley Cup Playoffs series.

Find out how and when to watch Game 4 of the Cavaliers vs. Magic NBA Playoffs series, even if you don't have cable.

Here's how and when to watch Game 4 of the Denver Nuggets vs. Los Angeles Lakers NBA Playoffs series.

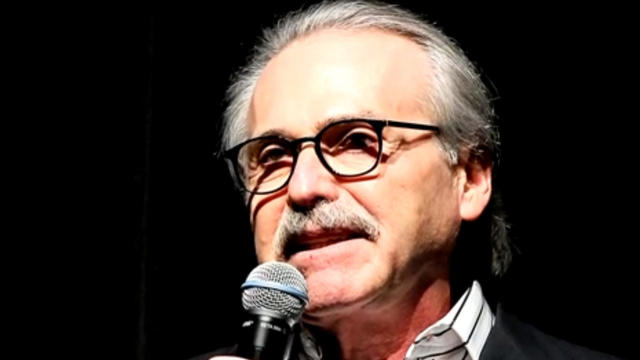

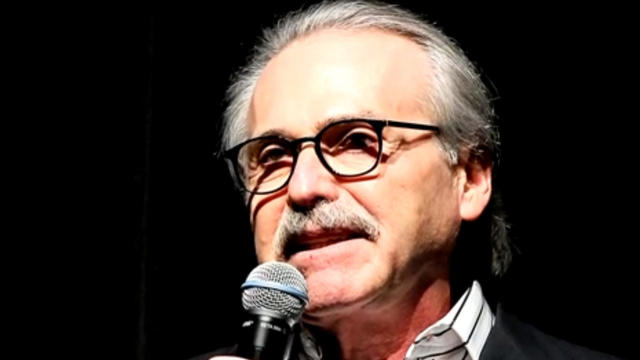

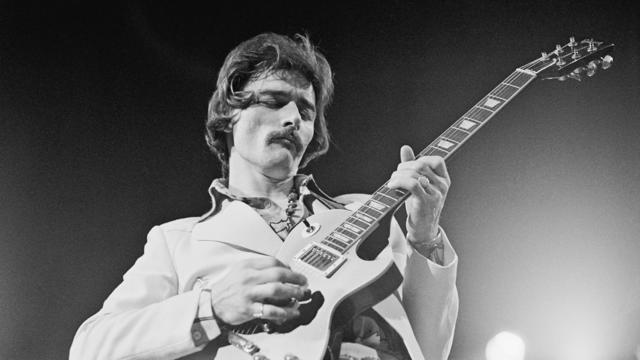

Ex-tabloid publisher David Pecker took the stand again Friday to detail the scheme he helped broker to suppress stories that could have hurt Donald Trump's 2016 campaign. Robert Costa has more.

More than a dozen tornadoes have touched down in three states – Texas, Oklahoma and Nebraska. Video shows a massive twister tearing across the interstate north of Lincoln, Nebraska, as large pieces of debris flew through the air. Omar Villafranca reports from Ennis, Texas.

Pro-Palestinian protests emerged on more college campuses Friday amid growing concerns about outside demonstrators not linked to schools where protests are being staged. Lilia Luciano has details.

Another American has been arrested in the Caribbean territory of Turks and Caicos after ammunition was allegedly found in his luggage. The Virginia man is the fourth American detained under similar circumstances in the last several months. Kris Van Cleave has more.

Secretary of State Antony Blinken met Friday with Chinese President Xi Jinping and other top officials in Beijing. Blinken raised concern that China is helping Russia to produce tanks, armored vehicles and weapons for its war in Ukraine. Nancy Cordes has more from the White House.

Sophia Bush filed for divorce from entrepreneur Grant Hughes in August 2023 after a year of marriage and later started dating former World Cup champion soccer player Ashlyn Harris.

The Heisman Trophy was returned to former University of Southern California running back Reggie Bush Thursday after a 14-year dispute with the NCAA.

Reality star and designer Whitney Port discusses her new partnership with prenatal vitamin company Perelel and launches the "Fertility, Unfiltered" video series. She also talks for the first time about her personal decision to pursue IVF again after facing challenges in conceiving a second child.

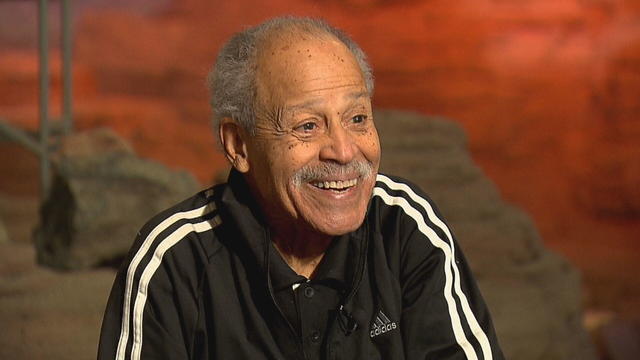

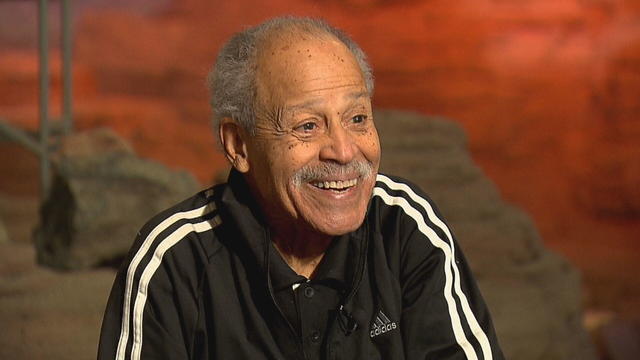

Reggie Bush reflects on the reinstatement of his Heisman Trophy after 14 years, discusses his ongoing defamation lawsuit against the NCAA and shares his insights on the future of college football. This marks his first in-depth interview since the Heisman Trust's decision to return the award.

Police bodycam video shows the encounter that ended in the death of Frank Tyson, a Black man in Canton, Ohio. Officers arrested him after a car crash and restrained him facedown. Warning: the video is disturbing.

Democratic Congressman Ro Khanna joins The Takeout to discuss President Biden's approach to immigration, the economy & conflict in the Middle East. Khanna talks Biden's outlook in the 2024 election, America's electoral future & a teacher who inspired him.

Author and former Golf Channel broadcaster Lisa Cornwell talks to Major Garrett about her book, "Troublemaker," detailing her experience at the network. They discuss the state of the PGA compared to the LPGA.

CBS correspondent and author Jonathan Vigliotti joins The Takeout to discuss his new book "Before It's Gone," about how climate change is impacting small towns due to their lack of infrastructure and financial resources. He focuses on how communities rebuild after climate-related disasters and what lessons can be learned from their resilience.

For this edition of "The Takeout," Major Garrett speaks to filmmaker Brian Knappenberger about directing the nine-part documentary series, "Turning Point: The Bomb and the Cold War." The Netflix series offers a comprehensive look at the Cold War and its aftermath. Knappenberger says the aim of his documentary was to reflect and to also explore present-day tensions.

Analyst and author Ken Block joins The Takeout to discuss his new book, "Disproven." Block explains his hiring by the Trump campaign to search for voter fraud, his fact-driven investigation into claims of voter fraud and where the claims may have come from.

In 1961, Ed Dwight was selected by President John F. Kennedy to enter an Air Force training program known as the path to NASA's Astronaut Corps. But he ultimately never made it to space.

At his lowest moment, U.S. Army veteran and former teacher Billy Keenan found strength in his faith as he was reminded of his own resilience.

A surfing accident left New York teacher Billy Keenan paralyzed, but when he received a call from a police officer, his life changed.

The So Much To Give Inclusive Cafe in Cedars, Pennsylvania, employs 63 people, 80% of whom have a disability.

A mom was worried about what her son, who has autism, would do after high school. So she opened the So Much To Give cafe, a restaurant in Cedars, Pennsylvania, that employs people with disabilities – and helps them grow.

CBS Reports goes to Illinois, which has one of the highest rates of institutionalization in the country, to understand the challenges families face keeping their developmentally disabled loved ones at home.

As more states legalize gambling, online sportsbooks have spent billions courting the next generation of bettors. And now, as mobile apps offer 24/7 access to placing wagers, addiction groups say more young people are seeking help than ever before. CBS Reports explores what experts say is a hidden epidemic lurking behind a sports betting bonanza that's leaving a trail of broken lives.

In February 2023, a quiet community in Ohio was blindsided by disaster when a train derailed and authorities decided to unleash a plume of toxic smoke in an attempt to avoid an explosion. Days later, residents and the media thought the story was over, but in fact it was just beginning. What unfolded in East Palestine is a cautionary tale for every town and city in America.

In the aftermath of the Supreme Court striking down affirmative action in college admissions, CBS Reports examines the fog of uncertainty for students and administrators who say the decision threatens to unravel decades of progress.

CBS Reports examines the legacy of the U.S. government's terrorist watchlist, 20 years after its inception. In the years since 9/11, the database has grown exponentially to target an estimated 2 million people, while those who believe they were wrongfully added are struggling to clear their names.

Around 1 in 5 retail milk samples tested positive for the bird flu virus, but further tests showed the virus was not infectious.

The China-based owner of TikTok is facing a new law that will force it to either sell the wildly popular video platform, or face a U.S. ban.

Border officers have broad authority to search travelers' electronic devices without a warrant or suspicion of a crime.

The White House had been due to decide on the menthol cigarette rule in March.

The discovery of drug-resistant bacteria in two dogs prompted a probe by the CDC and New Jersey health authorities.

Are you using your smartwatch to the fullest? Here are 4 metrics doctors say can be useful to track beyond your daily step count.

King Charles III took a break from public appearances nearly three months ago after he was diagnosed with an undisclosed type of cancer while undergoing treatment for an enlarged prostate.

Fans vote for the award winners, which often leads to surprise wins and collaborative performances.

Preview: In an interview to be broadcast on "CBS News Sunday Morning" April 28, the Oscar-nominated actress also talks about her debut as a singer-songwriter with the album "Glorious."

Looking for a place to live in NYC? Zillow is now listing Frank Sinatra and Mia Farrow's former home on the Upper East Side.

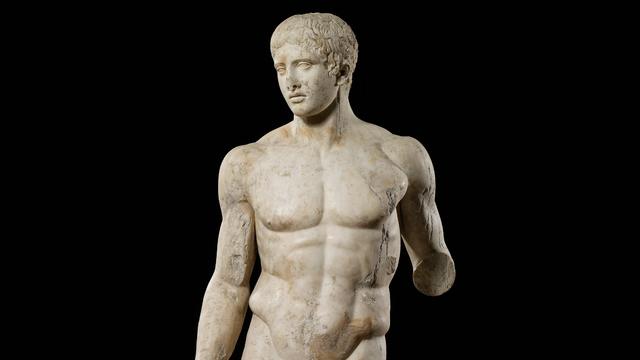

Italy's Culture Ministry has banned loans of works to the Minneapolis Institute of Art, following a dispute with the U.S. museum over an ancient marble statue believed to have been looted from Italy almost a half-century ago.

NYU Langone Health and Meta have developed a new type of MRI that dramatically reduces the time needed to complete scans through artificial intelligence. CBS News correspondent Anne-Marie Green reports.

The Federal Communications Commission voted to adopt net neutrality regulations, a reversal from the policy adopted during former President Donald Trump's administration. Christopher Sprigman, a professor at the New York University School of Law, joins CBS News with more on the vote.

From labor shortages to environmental impacts, farmers are looking to AI to help revolutionize the agriculture industry. One California startup, Farm-ng, is tapping into the power of AI and robotics to perform a wide range of tasks, including seeding, weeding and harvesting.

Local and federal authorities face challenges in investigating and prosecuting romance scammers because the scammers are often based overseas. Jim Axelrod explains.

Bats have often been called scary and spooky, but experts say they play an important role in our daily lives. CBS News' Danya Bacchus explains why the mammals are so vital to our ecosystem and the threats they're facing.

Pediatrician Dr. Mona Hanna-Attisha, whose work has spurred official action on the Flint water crisis, told CBS News that it's stunning that "we continue to use the bodies of our kids as detectors of environmental contamination." She discusses ways to support victims of the water crisis, the ongoing work of replacing the city's pipes and more in this extended interview.

Ten years ago, a water crisis began when Flint, Michigan, switched to the Flint River for its municipal water supply. The more corrosive water was not treated properly, allowing lead from pipes to leach into many homes. CBS News correspondent Ash-har Quraishi spoke with residents about what the past decade has been like.

According to the University of California, Davis, residential energy use is responsible for 20% of total greenhouse gas emissions in the U.S. However, one company is helping residential buildings reduce their impact and putting carbon to use. CBS News' Bradley Blackburn shows how the process works.

Emerging cicadas are so loud in one South Carolina county that residents are calling the sheriff's office asking why they can hear a "noise in the air that sounds like a siren, or a whine, or a roar." CBS News' John Dickerson has details.

Angel Gabriel Cuz-Choc was found hiding in a wooded area after his girlfriend and her 4-year-old daughter were found dead in Florida.

Dramatic bodycam footage shows the moment Florida deputies and K-9 dogs close in on a double murder suspect hiding in a thickly wooded area.

A new "48 Hours" investigation looks into the death of a Kansas woman who was found dying from a gunshot wound in 2019. The coroner initially ruled Kristen Trickle's death a suicide, but the local prosecutor said evidence on the scene didn't add up. "48 Hours" correspondent Erin Moriarty has the story.

A Bucharest court has ruled that a case against social media influencer Andrew Tate meets the required legal criteria and can go ahead, but there's no date set yet.

After Kristen Trickle died at her home in Kansas, her husband Colby Trickle received over $120,000 in life insurance benefits and spent nearly $2,000 on a sex doll supposedly to help him sleep.

Astronauts Barry Wilmore and Sunita Williams say they have complete confidence in the Starliner despite questions about Boeing's safety culture.

In 1961, Ed Dwight was selected by President John F. Kennedy to enter an Air Force training program known as the path to NASA's Astronaut Corps. But he ultimately never made it to space.

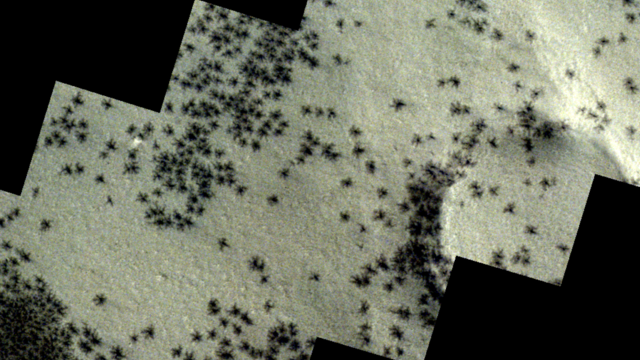

The creepy patterns were observed by the European Space Agency's ExoMars Trace Gas Orbiter.

The Shenzhou 18 crew will replace three taikonauts aboard the Chinese space station who are wrapping up a six-month stay.

In November 2023, NASA's Voyager 1 spacecraft stopped sending "readable science and engineering data."

A look back at the esteemed personalities who've left us this year, who'd touched us with their innovation, creativity and humanity.

The Francis Scott Key Bridge in Baltimore collapsed early Tuesday, March 26, after a column was struck by a container ship that reportedly lost power, sending vehicles and people into the Patapsco River.

When Tiffiney Crawford was found dead inside her van, authorities believed she might have taken her own life. But could she shoot herself twice in the head with her non-dominant hand?

We look back at the life and career of the longtime host of "Sunday Morning," and "one of the most enduring and most endearing" people in broadcasting.

Cayley Mandadi's mother and stepfather go to extreme lengths to prove her death was no accident.

For more than 200 days after Hersh Goldberg-Polin was taken hostage by Hamas on Oct. 7, his mother hadn't heard his voice or seen video that proved he was alive. But that changed this week, when Hamas released a propaganda video showing Hersh – an Israeli-American – alive with his left arm amputated. CBS News' Debora Patta sat down with his mother, Rachel Goldberg-Polin, to ask about the "overwhelming and emotional" moment she saw that video and how she hopes all parties involved can reach a compromise to end the suffering.

A Delta Air Lines flight en route to Los Angeles was forced to circle back to New York's JFK International Airport Friday morning after it dropped an emergency exit slide.

Meet high school freshmen Joshua Small and Alexander Morris, a dynamic duo making a difference in their New York City community. The two longtime friends are teaming up to raise money to help young cancer patients and their families.

With the clock ticking on TikTok, millions of users, including small businesses, are scrambling to figure out what to do next. Jo Ling Kent reports.